K8s-cluster-install

Basic services

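| Name | Description |
|---|---|
| kubernetes (k8s) | Kubernetes cluster |
| kubeadm | Kubernetes cluster deployment tool |
| kubesphere (QingCloud) | Container management platform |
| Prometheus-Operator | Kubernetes monitoring platform |
| Grafana Loki | Log collection platform |
| jaeger | Distributed tracing |
| skywalking | Distributed tracing |
| KubeKey | kk, a one-command Kubernetes installer |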

k8s component overview

Control plane components

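- kube-apiserver: the API server is the front end of the Kubernetes control plane. It receives external requests and relays communication between the other components.
- etcd: a consistent, highly available key-value store that serves as the backing database for all Kubernetes cluster data.
- kube-scheduler: schedules Pods onto nodes. Scheduling decisions take into account the resource requirements of individual Pods and groups of Pods, hardware/software/policy constraints, affinity and anti-affinity rules, data locality, interference between workloads, and deadlines.
- kube-controller-manager: runs the controller processes (node, job, endpoints, service account and token controllers).
- cloud-controller-manager: controllers that interact with the cloud platform (node, route, and service controllers). Optional.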

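Node components (run on every node)

- kubelet: makes sure the containers of the Pods assigned to the node are running and reports node status.
- kube-proxy: the network proxy on each node.

Container runtime / add-ons (DNS is required) / Web UI (management console) / monitoring / logging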

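Architecture

- master nodes: run kube-apiserver, kube-scheduler, and kube-controller-manager; they manage the cluster.
- etcd nodes: run etcd, which stores the cluster's critical state.
- worker nodes: run the business service containers.

Open question: what is the difference between deploying etcd separately from the masters and deploying both on the same machines?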

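High availability

- Run kube-apiserver, kube-controller-manager, and kube-scheduler on the masters as multiple instances across at least three nodes.
- Deploy etcd as a cluster of at least 3 nodes.
- Load balancer: use an active/standby (hot standby) pair that exposes a virtual IP to the nodes as the entry address for clients.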
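The three-node minimum for etcd follows from majority quorum: etcd (through Raft) can only accept writes while more than half of its members are available. The sketch below just works through that arithmetic; the function names are made up for illustration.

```shell
#!/bin/sh
# Majority quorum for an etcd cluster with N members: floor(N/2) + 1.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while the cluster still has quorum.
etcd_fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Two members tolerate no failure at all, while three tolerate one. An even member count adds no fault tolerance over the odd count just below it, which is why 3 or 5 members are the usual choices.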

High security

- Enable CA-based HTTPS (TLS) authentication on the master and etcd clusters, and enable RBAC authorization on the masters.

Quickly deploying a k8s + KubeSphere (QingCloud) cluster

Deployment steps

    -- Run on all machines

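All-in-one deployment

An all-in-one deployment is a single-machine deployment: everything is installed on one machine, which makes it suitable for lab environments.

Notes:

- Give the virtual machine more than 8 GB of memory and 8 virtual CPUs.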

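- Run `export KKZONE=cn` before running kk commands to work around network problems. The variable only lasts for the current shell session, so set it again in every new shell.
- Prefer the release just before the latest one instead of the newest. Mirrors in China may lag behind, so some resources of the newest release can fail to download.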
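If you would rather not depend on an earlier `export`, one option is to set `KKZONE` on the command itself. This is a minimal sketch: `kk_cn` and the `KK_BIN` override are hypothetical helpers, and the version numbers in the example are placeholders, not recommendations.

```shell
#!/bin/sh
# KK_BIN is a hypothetical override for the path of the kk binary (default: ./kk).
KK_BIN="${KK_BIN:-./kk}"

# Run one kk command with KKZONE=cn set for that command only.
kk_cn() {
    KKZONE=cn "$KK_BIN" "$@"
}

# Example (not executed here; versions are placeholders):
# kk_cn create cluster --with-kubernetes v1.21.5 --with-kubesphere v3.2.1
```

Because the variable is passed per command, it does not matter whether the surrounding shell has exported it.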

Components

To follow the component installation log:

    kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-installer -o jsonpath='{.items[0].metadata.name}') -f

or

    kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

Components can be enabled either at install time or after installation.

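Enabling at install time

    # Download https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml
    # Enable the components in cluster-configuration.yaml (published with the same release), then apply it
    kubectl apply -f cluster-configuration.yaml

Enabling after installation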

Command line: run `kubectl -n kubesphere-system edit clusterconfiguration ks-installer`.

Console path: Cluster Management -> Custom Resources (CRDs) -> clusterconfiguration -> ks-installer -> edit the configuration to enable components -> Service Components to check the component status.
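As a non-interactive alternative to `kubectl edit`, the same change can be sketched as a merge patch. This assumes the component is switched by a top-level `spec.<component>.enabled` field, as with `devops`, `logging`, `servicemesh`, and `metrics_server`. `enable_component` is a hypothetical helper name.

```shell
#!/bin/sh
# Enable one component in the ks-installer ClusterConfiguration.
# Assumption: the component is toggled by spec.<component>.enabled.
enable_component() {
    component="$1"
    kubectl -n kubesphere-system patch clusterconfiguration ks-installer \
        --type merge \
        -p "{\"spec\":{\"$component\":{\"enabled\":true}}}"
}

# Example (not executed here):
# enable_component devops
```

After patching, the ks-installer log shown earlier is the place to watch the component being rolled out.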

devops

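- If a pipeline stays in the queue, check whether a CI node is configured: the cluster node label `node-role.kubernetes.io/worker` should be set to `ci`.
- Workbench -> Workspaces -> DevOps projects
- Custom Jenkins Agent: in Cluster Management -> Cluster -> Configuration -> ConfigMaps -> `jenkins-casc-config`, add the configuration for the custom image, then log in to Jenkins and reload it (newer versions may not need this step). After that, the Jenkins pipeline script can reference the matching `agent.node.label` to build with the custom image.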
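The CI node label from the first item can be set and checked with `kubectl`. This is a small sketch; the function names are illustrative and the node name is whatever your cluster uses.

```shell
#!/bin/sh
# Mark a node as a CI node by setting node-role.kubernetes.io/worker=ci.
# --overwrite replaces an existing value of the same label.
label_ci_node() {
    kubectl label node "$1" node-role.kubernetes.io/worker=ci --overwrite
}

# List the nodes that currently carry the ci label value.
list_ci_nodes() {
    kubectl get nodes -l node-role.kubernetes.io/worker=ci
}

# Example (not executed here; "node1" is a placeholder):
# label_ci_node node1 && list_ci_nodes
```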

logging(monitoring)

- Log monitoring

Istio(servicemesh)

- Service mesh

metrics_server

Resource monitoring

    # This command only works once metrics_server is enabled
    kubectl top node

Common errors

Installing KubeSphere fails with the following error:

    ERRO[16:03:26 CST] Failed to exec command: sudo -E /bin/sh -c "/usr/local/bin/kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs --from-file=etcd-client-ca.crt=/etc/ssl/etcd/ssl/ca.pem --from-file=etcd-client.crt=/etc/ssl/etcd/ssl/node-k8s-etcd-242.14.pem --from-file=etcd-client.key=/etc/ssl/etcd/ssl/node-k8s-etcd-242.14-key.pem"
    error: error reading /etc/ssl/etcd/ssl/node-k8s-etcd-242.14.pem: no such file or directory: Process exited with status 1 node=10.255.242.10

Fix:

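    # Copy every certificate file whose name starts with member- to the same name starting with node- (one example shown)
    cp /etc/ssl/etcd/ssl/member-k8s-etcd-242.14-key.pem /etc/ssl/etcd/ssl/node-k8s-etcd-242.14-key.pem
    # Then run the deployment again
    ./kk create cluster -f config-sample.yaml | tee kk.log

Running kk on a worker node fails with the following error: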

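    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    error execution phase upload-certs: error uploading certs: error creating token: timed out waiting for the condition
    ....
    Failed to get cluster status: Failed to upload kubeadm certs: Failed to exec command: sudo -E /bin/sh -c "/usr/local/bin/kubeadm init phase upload-certs --upload-certs"

Fix: check that the load balancer configured in `controlPlaneEndpoint` is working. If the load balancer is healthy and the step still fails, point `controlPlaneEndpoint` at a master node's IP for now and add the load balancer once the deployment has succeeded. After changing the endpoint, run `./kk delete cluster -f config-sample.yaml` and then run the install command again; otherwise the earlier installation still holds the old load balancer address.

Adding a node (agent) to a pipeline fails with: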

    java.net.ProtocolException: Expected HTTP 101 response but was '400 Bad Request'

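Fix: the new agent configuration added to `jenkins-casc-config` is wrong. The most reliable approach is to copy an existing template and rename everything in it, for example replacing every `nodejs` with `nodejs1415`. Do not use template inheritance; it may only be supported in newer versions.

Opening a certain page shows `Internal Server Error`:

    Internal Server Error: "/apis/clusters/sim-1/apiextensions.k8s.io/v1beta1/customresourcedefinitions/clusterconfigurations.installer.kubesphere.io": http2: invalid Connection request header: ["upgrade"]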

Fix: add the following to the nginx configuration on the load balancer server (keepalived):

    # The location path must match the path shown in the error message
    location /apis/clusters/sim-1/apiextensions.k8s.io {
        proxy_http_version 1.1;
        proxy_redirect off;
        proxy_pass http://<actual-domain-address>;
        proxy_set_header Host $host:$server_port;
        #proxy_set_header Upgrade $http_upgrade;
        proxy_set_header X-Forwarded-Proto $scheme;
        #proxy_set_header Connection "upgrade";
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

Deploying with helm fails on push with the following error:

    Error: UPGRADE FAILED: pre-upgrade hooks failed: unable to build kubernetes object for deleting hook appchart-uat/templates/alertReceiver.yaml: unable to recognize "": no matches for kind "Receiver" in version "notification.kubesphere.io/v2beta2"

Fix: install kubesphere/notification-manager.

Analysis: the error points to a webhook problem. Checking the `Monitoring` components showed only 10 services; [notification-manager-webhook](https://host-kslb.mh.xxx.com.cn/clusters/innovation-lab/components/kubesphere-monitoring-system/notification-manager-webhook) was missing, so reinstall it following the official documentation:

    # Deploy CRDs and the Notification Manager Operator:
    kubectl apply -f https://github.com/kubesphere/notification-manager/releases/download/v2.0.1/bundle.yaml
    # Deploy default template:
    kubectl apply -f https://github.com/kubesphere/notification-manager/releases/download/v2.0.1/template.yaml
    # Deploy built-in language packs.
    kubectl apply -f https://github.com/kubesphere/notification-manager/releases/download/v2.0.1/zh-cn.yaml

After deleting the logging component, the following message appears:

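    no endpoints available for service ":ks-apiserver:"

The exact error can no longer be found; roughly, port 9443 could not be reached.

Fix: same as the fifth problem (the helm push error above).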

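Pipeline error:

    Error: UPGRADE FAILED: pre-upgrade hooks failed: receivers.notification.kubesphere.io "-webhook-receiver" is forbidden: User "xxx" cannot delete resource "receivers" in API group

Fix: user xxx has no permissions on the cluster; switch to the `kubeconfig` of a user who does.

Cause: the pipeline uses the cluster credentials of user xxx to create the CRD resources, and the request is denied.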

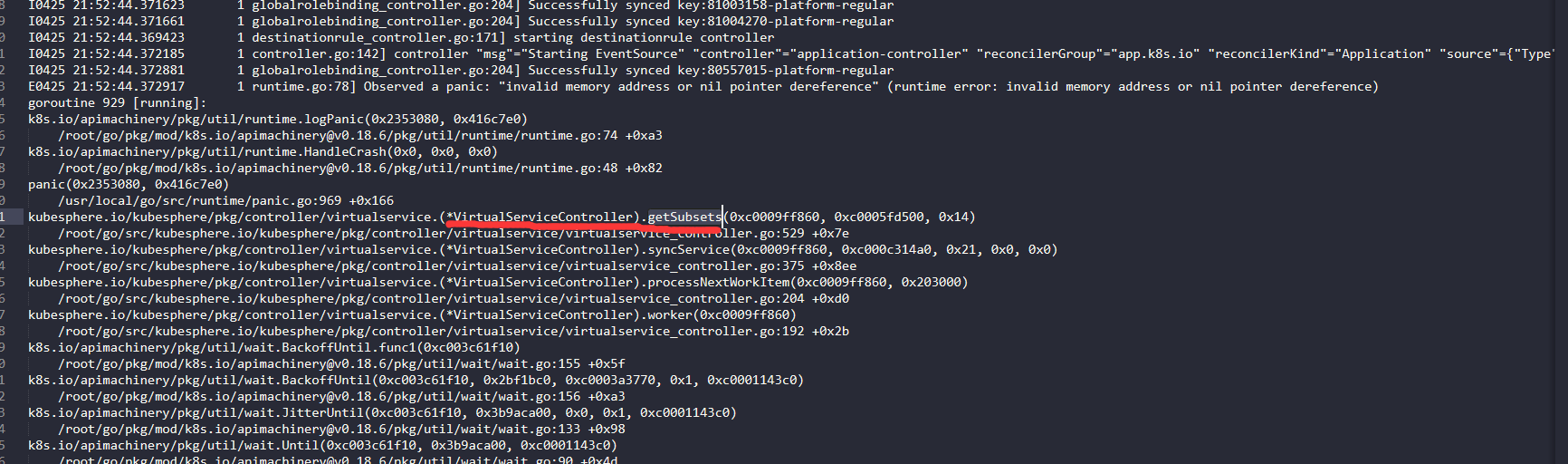

ks-controller-manager部署启动报错,不断重启,错误信息如下:

关键错误信息:

E0425 16:45:32.489641 1 runtime.go:78] Observed a panic: "invalid memory address or nil pointer dereference" (runtime error: invalid memory address or nil pointer dereference)错误定位:可在日志中看到是

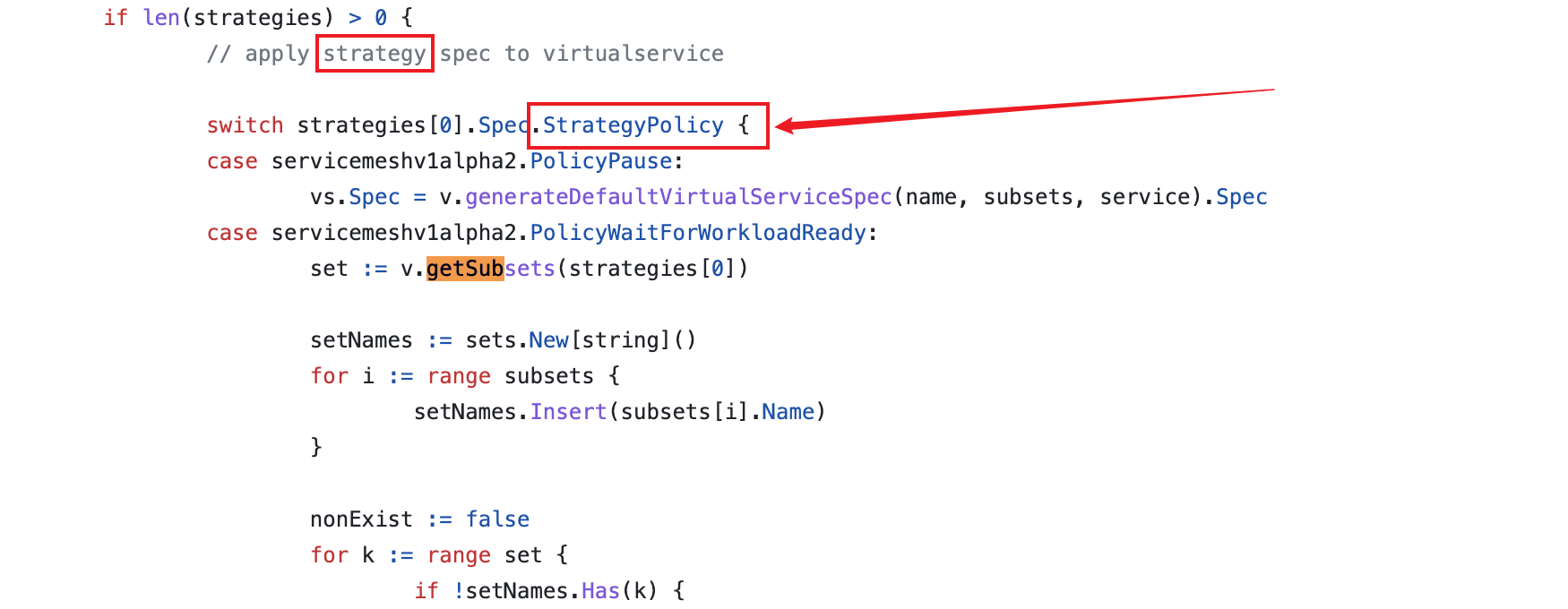

(*VirtualServiceController).getSubsets该方法报错,去kubesphere/pkg/controller/virtualservice/virtualservice_controller.go的代码里面找到getSubsets方法

可以看到是strategy应用出了问题。

Fix: under Custom Resources (CRDs), search for `Strategy`, open it, and delete the custom resources that may be faulty, for example:

    apiVersion: servicemesh.kubesphere.io/v1alpha2
    kind: Strategy
    metadata:
      annotations:
        app.kubernetes.io/icon: /assets/bookinfo.svg
        kubesphere.io/creator: '81006638'
        servicemesh.kubesphere.io/newWorkloadName: reviews-v2
        servicemesh.kubesphere.io/oldWorkloadName: reviews-v1
        servicemesh.kubesphere.io/workloadReplicas: '1'
        servicemesh.kubesphere.io/workloadType: deployments
      labels:
        app: reviews
        app.kubernetes.io/name: bookinfo
        app.kubernetes.io/version: v1
      name: v2
      namespace: dairk-test
    spec:
      principal: v1
      selector:
        matchLabels:
          app: reviews
          app.kubernetes.io/name: bookinfo
          app.kubernetes.io/version: v1
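The same cleanup can be sketched on the command line instead of the console. The fully qualified resource name `strategies.servicemesh.kubesphere.io` is an assumption derived from the `apiVersion` and `kind` above, and the helper names are hypothetical. Keeping a copy of the resource before deleting it makes it possible to restore it if it turns out not to be the culprit.

```shell
#!/bin/sh
# Assumed resource name, derived from kind "Strategy" in servicemesh.kubesphere.io.
STRATEGY_RESOURCE="strategies.servicemesh.kubesphere.io"

# List Strategy resources in all namespaces.
list_strategies() {
    kubectl get "$STRATEGY_RESOURCE" --all-namespaces
}

# Save a copy of one Strategy to a local file, then delete it.
backup_and_delete_strategy() {
    ns="$1"
    name="$2"
    kubectl -n "$ns" get "$STRATEGY_RESOURCE" "$name" -o yaml > "strategy-$ns-$name.yaml" &&
        kubectl -n "$ns" delete "$STRATEGY_RESOURCE" "$name"
}

# Example (not executed here), using the resource shown above:
# backup_and_delete_strategy dairk-test v2
```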

Deployment configuration: config-sample.yaml

The main thing to change is `hosts`.

    apiVersion: kubekey.kubesphere.io/v1alpha1