k8s Common Issues Log

Error message:

Failed to create pod sandbox: rpc error: code = DeadlineExceeded desc = failed to get sandbox image "k8s.gcr.io/pause:3.8": failed to pull image "k8s.gcr.io/pause:3.8": failed to pull and unpack image "k8s.gcr.io/pause:3.8": failed to resolve reference "k8s.gcr.io/pause:3.8": failed to do request: Head "https://k8s.gcr.io/v2/pause/manifests/3.8": dial tcp 74.125.23.82:443: i/o timeout

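Cause: the key part of the message is

failed to pull image "k8s.gcr.io/pause:3.8", which means the image pull failed. k8s.gcr.io resolves only to overseas IP addresses (here 74.125.23.82), and the connection from the node timed out.

Option 1 (temporary workaround):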

# Pull the image from a reachable mirror

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8

# Retag the image with the name containerd expects

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8 k8s.gcr.io/pause:3.8
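The pull-and-retag pair above can be wrapped in a small function so the same two steps are repeated consistently for each image. This is only a sketch: `pull_and_retag` is a hypothetical helper name, and it assumes `crictl` and `ctr` are on the PATH and that containerd stores Kubernetes images in the `k8s.io` namespace, as in the commands above.

```shell
# Hypothetical helper (not part of crictl or ctr): pull an image from a mirror
# and give it the name that the kubelet asks for.
pull_and_retag() {
  local mirror_ref="$1" expected_ref="$2"
  # Pull from the reachable mirror; stop here if the pull fails.
  crictl pull "$mirror_ref" || return 1
  # Add the expected name as a second tag in containerd's k8s.io namespace.
  ctr -n k8s.io i tag "$mirror_ref" "$expected_ref"
}
```

For the pause image this would be called as `pull_and_retag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8 k8s.gcr.io/pause:3.8`. The retag only exists on the node where it is run, so it would have to be repeated on every node.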

# Same approach for another image; note that the 1.8.3 mirror image is retagged under the 1.8.5 name

crictl pull registry.cn-hangzhou.aliyuncs.com/owater/cluster-proportional-autoscaler-amd64:1.8.3

ctr -n k8s.io i tag registry.cn-hangzhou.aliyuncs.com/owater/cluster-proportional-autoscaler-amd64:1.8.3 k8s.gcr.io/cpa/cluster-proportional-autoscaler-amd64:1.8.5

Option 2 (permanent fix):

vi /etc/containerd/config.toml

# Change this line: sandbox_image = "k8s.gcr.io/pause:3.8"

# to: sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8"

systemctl daemon-reload

systemctl restart containerd
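The manual edit in vi can also be scripted, which is handy when several nodes need the same change. The following is a minimal sketch, not a vetted tool: `set_sandbox_image` is a hypothetical helper, and it assumes the config file already contains a `sandbox_image = "..."` line as shown above.

```shell
# Hypothetical helper: rewrite the sandbox_image value in a containerd config file.
set_sandbox_image() {
  local config_file="$1" new_image="$2"
  # Keep a backup so the change can be reverted.
  cp "$config_file" "${config_file}.bak" || return 1
  # Replace only the quoted value on the sandbox_image line, keeping its indentation.
  sed -E "s#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*)\"[^\"]*\"#\1\"${new_image}\"#" \
    "${config_file}.bak" > "$config_file"
}
```

A call such as `set_sandbox_image /etc/containerd/config.toml registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8` would then be followed by the same containerd restart as in the manual procedure.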

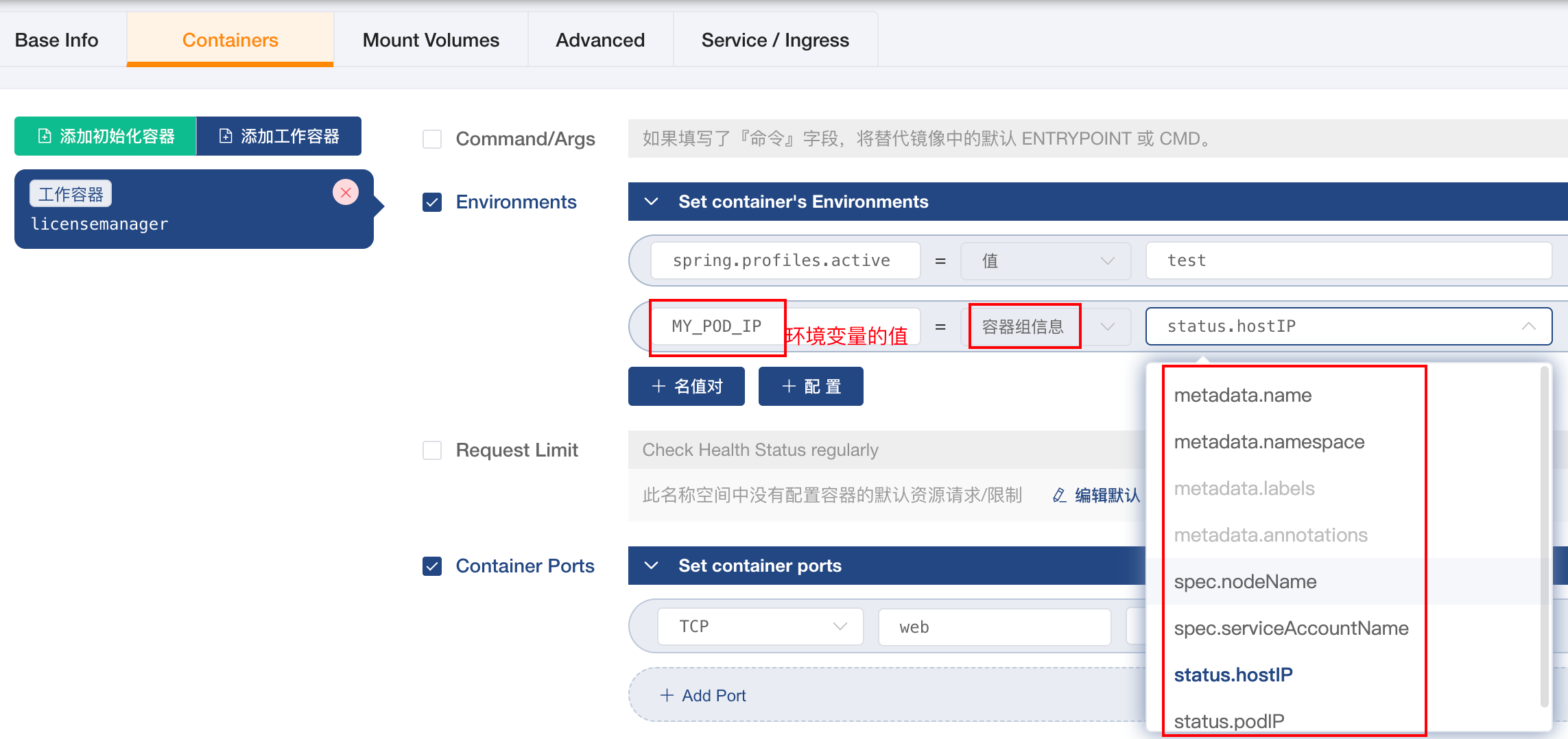

systemctl status containerd需求信息:获取k8s的节点ip

Reason: some services need to know the IP of the node they run on.

Solution:

1

2

3

4

5

6

7

8

9

10

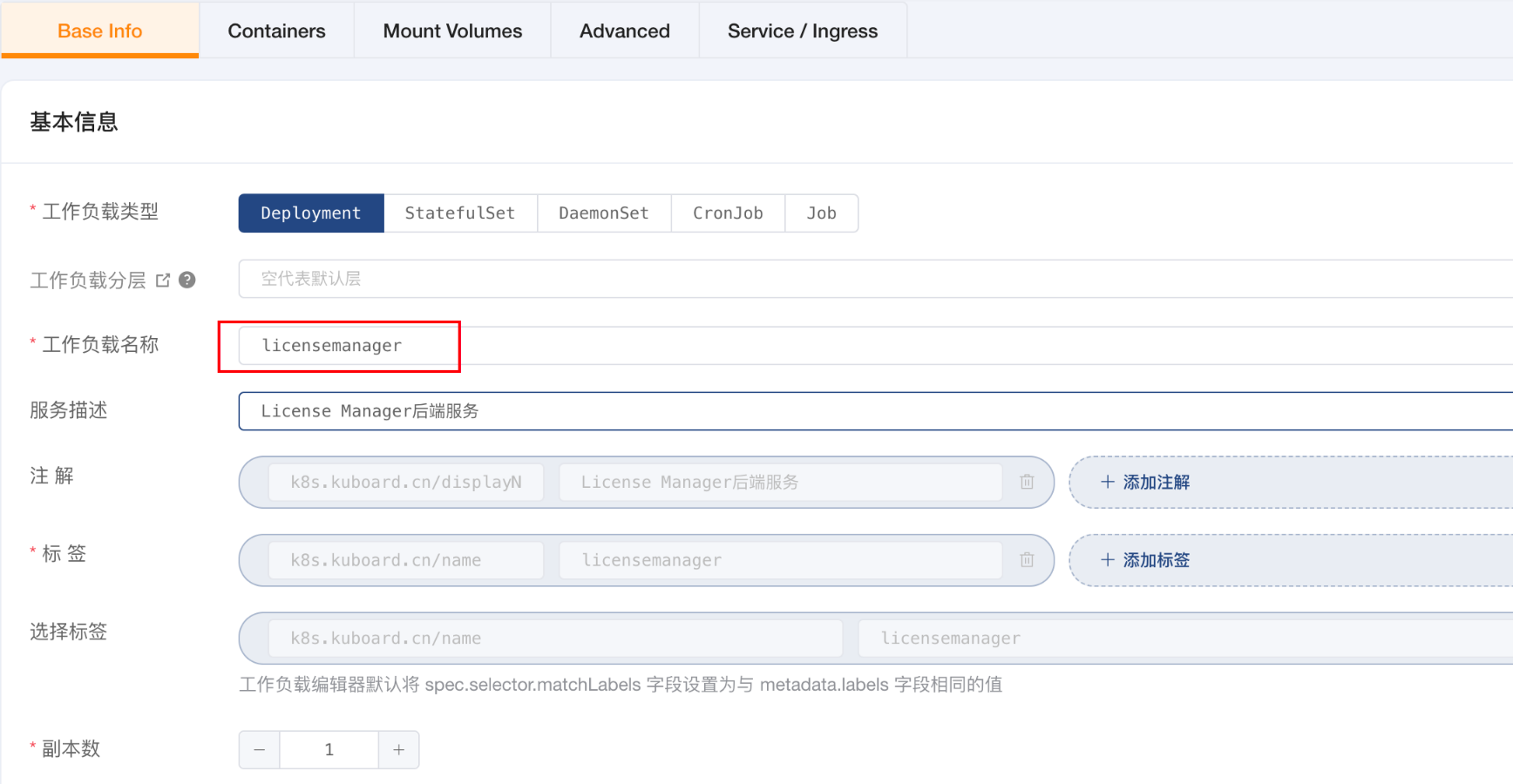

11spec:

containers:

- env:

- name: spring.profiles.active

value: test

#增加如下配置

- name: MY_POD_IP #MY_POD_IP是自定义的名字,可以修改

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.hostIP对应的界面配置:
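To try the env fragment outside an existing deployment, a complete throwaway Pod can help. The sketch below prints such a manifest from a shell function; the function name, the pod name `host-ip-demo`, and the `busybox:1.36` image are all assumptions made for illustration, and only the `env` entry comes from the configuration above.

```shell
# Hypothetical helper: print a minimal Pod manifest that injects the node IP.
print_host_ip_demo_pod() {
  local pod_name="${1:-host-ip-demo}"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${pod_name}
spec:
  restartPolicy: Never
  containers:
  - name: demo
    image: busybox:1.36
    # Print the injected value once, then exit.
    command: ["sh", "-c", "echo node ip: \$MY_POD_IP"]
    env:
    - name: MY_POD_IP
      valueFrom:
        fieldRef:
          fieldPath: status.hostIP
EOF
}
```

Piping the output into `kubectl apply -f -` and then reading `kubectl logs host-ip-demo` should show the IP of whichever node the pod was scheduled on.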

Verification: run env inside the container; the MY_POD_IP environment variable now holds the host's IP.
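The same check can be done from outside the container by comparing the injected variable with the host IP that Kubernetes reports for the pod. This is a sketch under assumptions: `check_host_ip_env` is a hypothetical helper, `kubectl` is assumed to be configured for the cluster, and the container image is assumed to ship `printenv`.

```shell
# Hypothetical helper: compare the injected variable with the pod's status.hostIP.
check_host_ip_env() {
  local pod="$1" namespace="${2:-default}" var_name="${3:-MY_POD_IP}"
  local injected host_ip
  # Read the variable from inside the pod's default container.
  injected=$(kubectl exec -n "$namespace" "$pod" -- printenv "$var_name")
  # Read the node IP that Kubernetes reports for this pod.
  host_ip=$(kubectl get pod -n "$namespace" "$pod" -o jsonpath='{.status.hostIP}')
  if [ "$injected" = "$host_ip" ]; then
    echo "OK: $var_name=$injected matches status.hostIP"
  else
    echo "MISMATCH: $var_name=$injected, status.hostIP=$host_ip"
    return 1
  fi
}
```

It would be called with a pod name and, optionally, a namespace and variable name, for example `check_host_ip_env my-pod test`.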